If you’ve read some of my previous articles, then you’ll know that I enjoy presenting some of the less well known Machine Learning algorithms. And, while they’re not completely out of the mainstream, today I’ll be exploring Genetic Algorithms. Firstly, I’ll break down the algorithm and then I’ll show how you can train an ML model using a Genetic Algorithm for optimization. Let’s get started.

Despite the impressive sounding name, Genetic Algorithms (GAs) are actually very simple. GAs are an optimization algorithm mirroring what occurs in nature – think fitness, natural selection and mating.

Genetic Algorithm optimization is particularly useful for complex, hilly or discrete loss surfaces where Gradient Descent methods may have some trouble. Of course, there are other optimization algorithms also capable of solving these problems e.g Simulated Annealing, Swarm methods, Bayesian Optimization but we’ll just cover GAs.

Methodology

The Genetic Algorithm works as follows:

- Initially, a population of potential solutions is generated randomly. This can be done by drawing samples from a distribution around the expected range of the parameters. The initial population could be considered analogous to species as in evolution.

- Evaluate the fitness of each member of the population. Essentially this is equivalent to the loss function.

- Select a number of parents and “mate” them in a process called crossover. Combine their model parameters (genes) into new combinations.

- Apply random mutation to a certain percentage of the genes.

- Run the algorithm for multiple generations.

As you can see, this process is similar to evolution, hence the name. Over many generations (iterations), the new population is driven towards a more and more optimal solution.

Advantages of Genetic Algorithms

GAs are able to explore a broader solution space, which is useful in navigating complex, noisy and/or non-convex optimization landscapes. Unlike gradient-based methods, genetic algorithms are less prone to getting stuck in local minima, enabling exploration of more diverse and potentially optimal solutions.

More importantly, GAs are resilient to discontinuous and non-differentiable problems where gradient-based methods struggle.

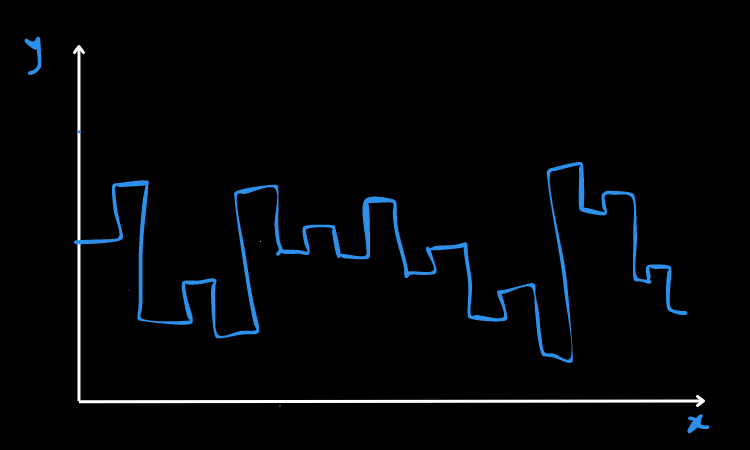

For example, consider the incredibly drawn function below . The function consists of abrupt transitions and steps with undefined gradients.

Modern Neural Networks are trained via the backpropagation algorithm and while the details are beyond the scope of this article, many Neural Network implementations such as PyTorch therefore require the use of differentiable functions i.e. with a gradient.

Actually, it is possible to make many discretized problems differentiable using a variety of tricks e.g Binary Cross Entropy, Gumbel-Softmax. However, you may just want an algorithm that can handle such problems out of the box. For example, in Reinforcement Learning where the agent needs to choose from a discrete set of actions at each step.

Disadvantages of Genetic Algorithms

Despite having their niche, GAs are not among the dominant optimization algorithms for training neural networks. This is down to the following reasons:

- Speed: GAs are slow, especially for complex problems or when the search space is vast. They need on a population-based approach, require a lot of evaluations, and rely on random mutations which makes the optimization process somewhat undirected.

- Convergence: Due to the semi-random nature of the search, GAs can struggle to find the absolute optimal solution. They excel in finding a ‘good enough’ in complex loss landscapes, requiring minimal assumptions, but can struggle to make the last fine grained adjustments.

- Tuning and Encoding: GAs have various parameters to tune, such as population size, mutation rate, crossover rate, etc. Finding the right combination of these parameters for a specific problem can be challenging and often requires empirical testing.

- Computational Cost: Operating with a population of potential solutions can be computationally expensive, as the fitness function needs to be evaluated many times. Algorithmically, it is less efficient that other potential optimization methods.

Training a PyTorch Model with a Genetic Algorithm using PyGAD

Now that we’ve covered the theory, lets dive into the practice.

In the following code, I will show you how you to train a PyTorch model, using a Genetic Algorithm to learn the optimal set of parameters . For the sake of simplicity I am using a template PyTorch model – feel free to add you own code and customize.

For the GA itself I will use the excellent PyGAD library. PyGAD provides a wrapper and handles the population, mutation and crossover, making training very straightforward.

The first step is to define your model. As mentioned, I’m using an example PyTorch Model but PyGAD is flexible enough to allow other options as well.

class ExampleModel(nn.Module):

def __init_():

super(ExampleModel, self).__init__()

# Your code goes here

def forward(x):

# Your code goes here

return y

def loss(y_p, y):

# Example loss RMSE

l = torch.mean(torch.sqrt((y_p - y)**2))

return l

# Instantiate

model = ExampleModel()

Next, we have to define the fitness function. The fitness function is analogous to the loss function in the typical ML nomenclature. Using PyGAD, we can load the solution’s parameters into the PyTorch model’s dictionary. From there, we run a forward pass of the model and calculate the loss. Notice, that we don’t call the backward pass – we are bypassing the backpropogation.

import torch

import pygad

from pygad import torchga

def fitness_func(ga_instance, solution, sol_idx):

global X, x, y, torch_ga, model

model_weights_dict = torchga.model_weights_as_dict(model=model, weights_vector=solution)

# Load the newest solution into the model parameters

model.load_state_dict(model_weights_dict)

y_pred = model(x)

l = model.loss(y_pred, y)

l = l.detach().numpy()

return -loss

Now we instantiate the GA optimizer and set up the parameters. The key parameters are:

- num_solutions: The number of evaluations (potential solutions) per generation

- num_generations: The number of times to run the algorithm, more generations tends towards better optimization

- num_parents_mating: The number of parent solutions to consider for the crossover process.

- num_genes: this should match the number of parameters

PyGAD also has additional options for fine grained control. Check out the documentation for more information.

torch_ga = torchga.TorchGA(model=model,

num_solutions=50)

num_generations = 150

num_parents_mating = 5

initial_population = torch_ga.population_weights

ga_instance = pygad.GA(num_generations=num_generations, num_parents_mating=num_parents_mating, initial_population=initial_population,

sol_per_pop=50,

num_genes = len(initial_population), fitness_func=fitness_func,

on_generation=callback_generation)

Turn off Torch gradients globally and then begin training with ga_instance.run().

torch.set_grad_enabled(False)

# Start the genetic algorithm evolution.

ga_instance.run()

Finally, upon completion we can load the best solution and check out the model fit/predictions.

solution, solution_fitness, solution_idx = ga_instance.best_solution()

best_solution_weights = torchga.model_weights_as_dict(model=model,

weights_vector=solution)

model.load_state_dict(best_solution_weights)

y= model(X)

PyGAD also has some automated plotting features to check the fitness evaluation after each generation. Below is one that I prepared earlier, we can see that the algorithm steadily climbs to an optimum through the generations.

Summary

In this article, we’ve explored the background of Genetic Algorithms, the methodology and finally, the practice. While niche, GA’s are a useful option to have in the toolbox, particularly for problems with discrete, noisy or complex loss surfaces. Next time you run into such a problem, consider GAs!

Thanks for reading the article, to finish the article I leave you with some interesting papers containing Genetic Algorithms below.

Genetic Algorithms: Some Interesting Research

- Deep Neuroevolution: Genetic Algorithms are a Competitive Alternative for Training Deep Neural Networks for Reinforcement Learning

- AI Programmer: Autonomously Creating Software Programs Using Genetic Algorithms

- Evolutionary Reinforcement Learning: A Survey

Leave a Reply